In this two-part blog series, we will explore the following topics:

- What is cloud gaming?

- How does cloud gaming work?

- Which performance metrics matter, and how can you objectively define a good cloud gaming experience?

- Importance of low latency

- How can end-to-end user latency be measured?

- How can you break down latency?

- How does cloud gaming compare with native gaming?

What is cloud gaming?

Cloud gaming is a technology that allows a user to play video games on any device without installing them on local hardware. Instead of running the games on a local device, users stream them from remote servers with powerful processors and graphics cards. This allows users to enjoy high-quality games without worrying about storage space, compatibility issues or performance problems. Cloud gaming has several pros and cons:

Pros

- No need to download/install game files or updates. The game is instantly available to play.

- It can deliver high-quality, high-resolution graphics and up to 120 FPS, even on low-powered hardware.

- It uses considerably fewer system resources, leading to longer play times on battery-powered devices.

- As the game code runs on a secure remote server, there is far less opportunity for cheating in multiplayer games.

Cons

- Higher latency

- Requires a good internet connection

- Limited games library

- It uses video compression, so the image quality is not as good as the original, although with modern compression codecs the difference is mostly imperceptible.

Depending on an individual’s use case, the cost could be considered a pro or a con, so it has been left out of the above.

How does cloud gaming work?

Cloud gaming works like video-on-demand services such as YouTube or Netflix. You need a client, a low-powered device that can connect to the internet. You also need software in the client that lets you access the cloud gaming service. The client software sends your inputs (such as keyboard presses, mouse clicks or controller movements) to the server where the game runs. The server processes your inputs, renders a new frame, and sends the video and audio output back to your device.

However, unlike video-on-demand services, cloud gaming is extremely sensitive to input latency. As a result, cloud gaming services cannot rely on buffering and other latency-inducing techniques to smooth over network or device hitches.

Characterising gaming experience

The following aspects must be measured from a gameplay perspective to characterise the gaming experience.

Responsiveness

These metrics capture how responsive the gameplay feels. Input latency is the key metric for understanding responsiveness.

Fluidity

The gamer must also see a minimum number of frames every second to perceive the experience as smooth or fluid. The higher the number of frames, the better the experience for the end gamer. Frames per second (FPS), frame rate variability and frame time percentiles are good metrics to capture to indicate the smoothness of the experience.
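As a rough illustration of these fluidity metrics, the Python sketch below derives average FPS, frame-time percentiles and frame-time standard deviation from a list of frame presentation timestamps. It assumes you already have per-frame timestamps in milliseconds; `frame_metrics` and the input format are hypothetical and not part of any GameBench tool, and frame-time standard deviation is just one possible way to express variability.

```python
import statistics


def frame_metrics(timestamps_ms):
    """Summarise fluidity from frame presentation timestamps in milliseconds."""
    if len(timestamps_ms) < 3:
        raise ValueError("need at least three timestamps")
    # Frame time is the gap between consecutive presented frames.
    frame_times = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    # Average FPS over the whole capture window.
    avg_fps = len(frame_times) * 1000.0 / (timestamps_ms[-1] - timestamps_ms[0])
    # 99 cut points, so index k holds the (k + 1)th percentile.
    pct = statistics.quantiles(frame_times, n=100, method="inclusive")
    return {
        "avg_fps": avg_fps,
        "p50_frame_time_ms": pct[49],
        "p95_frame_time_ms": pct[94],
        "p99_frame_time_ms": pct[98],
        # Spread of frame times: 0 means perfectly even pacing.
        "frame_time_stdev_ms": statistics.pstdev(frame_times),
    }
```

A single long frame barely moves the average FPS but shows up clearly in the high percentiles, which is why percentiles are worth capturing alongside FPS.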

Visual Quality

Modern graphics cards can render games at 4K resolution, enabling gamers to view special effects designed by the game studio in great detail. With cloud gaming, providers must ensure they can maintain a high-resolution video for as long as possible. During peak times, the network might be fully loaded, forcing cloud gaming providers to adapt the streamed resolution dynamically.

Gameplay duration for portable devices

For mobile phones, a key promise of cloud gaming is the ability to game for extended periods. As a result, we need a way to measure the impact of the client software on the battery.

Please find a link to a recent blog about how these aspects were used to benchmark cloud gaming on Chromebooks.

Metrics that capture the gaming experience

Input Latency

This is also called input lag or input-to-action latency. It is the time between a physical input (e.g., pressing the fire button) and the corresponding action on the screen, measured in milliseconds (ms). For example, it is the time from the mouse click to the first frame showing the gunfire (Figure 1).

Latency can directly impact a gamer’s performance, especially in first-person shooter and racing genres. The best explanation of how it can affect gameplay is to imagine you are playing a first-person shooter and looking down at the sights of your gun. You are waiting for an enemy to run past the centre of your scope, and when they do, you click ‘fire’. If the latency between seeing the enemy and the click registered by the game engine is significant enough, you will have missed the enemy, even though you pressed fire at the precise moment you saw the enemy at the centre of your scope.

With cloud gaming, input latency is a crucial metric to track to ensure the user gets a good experience. As the game rendering happens on the cloud, every input event needs to be sent to the cloud game server and the processed video frame sent back to the client.

Figure 1: Fire button pressed, and the resulting fire animation rendered on screen.

Frames per second

This is defined as the number of frames rendered in one second. Modern high-end mobile devices can render 120 frames per second to give the user a fluid experience. It’s essential to understand and differentiate between the frame rate of the video stream and the frame rate observed by the end user. This link shows a helpful animation showing the effect of different frame rates and their impact on end-user experience.
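One way to see the difference between the stream frame rate and the observed frame rate is to record which stream frame is visible at each display refresh and count how often it changes. The sketch below assumes such a per-refresh capture exists (for example, a frame counter burned into the video); `observed_fps` is a hypothetical helper, not a specific tool's API.

```python
def observed_fps(frame_ids, refresh_hz):
    """Estimate the frame rate the user actually sees.

    frame_ids: ID of the stream frame visible at each display refresh
    (a hypothetical capture format).
    """
    if not frame_ids:
        raise ValueError("no samples")
    # Count the first frame plus every refresh where a different frame appears.
    new_frames = 1 + sum(1 for prev, cur in zip(frame_ids, frame_ids[1:]) if cur != prev)
    # The capture spans len(frame_ids) / refresh_hz seconds.
    return new_frames * refresh_hz / len(frame_ids)
```

For example, a 120 fps stream on a 120 Hz display where two frames arrive late and are repeated might produce `[1, 2, 2, 3, 4, 4, 5, 6]`, which this sketch reports as 90 fps observed.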

Image Resolution

Cloud gaming has come a long way since it was launched. GeForce Now can stream at 4K (3840×2160) and 120 frames per second. Network conditions play a significant role in whether the platform provider can maintain that resolution for the entire gaming session. If the bandwidth available to the user drops, the cloud gaming service usually switches to a lower resolution. It’s essential to measure image resolution to quantify the fidelity of the graphics the gamer actually experiences.

The following video gives a good illustration of the detail perceived by the gamer at different resolutions.

Gameplay Hours

Lastly, it’s crucial to understand how much time you can game on a portable device when using a cloud gaming service. It’s well known that native games (that use the client’s hardware resources to render) have a higher current drain leading to quicker battery depletion. We need to know the battery capacity (in mAh) and the average current drain during the gaming session to estimate this. We can then divide the battery capacity by the current drain to estimate the possible gameplay hours on the device.
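The estimate described above is a single division, since mAh divided by mA gives hours. The sketch below assumes the full rated capacity is usable and that the drain stays at its session average, which real devices will not match exactly; the example figures are purely illustrative.

```python
def gameplay_hours(battery_capacity_mah, avg_current_ma):
    """Estimate gameplay hours: battery capacity (mAh) / average drain (mA)."""
    if avg_current_ma <= 0:
        raise ValueError("average current drain must be positive")
    return battery_capacity_mah / avg_current_ma


# Illustrative numbers only: a 5000 mAh battery drained at 800 mA on average.
print(gameplay_hours(5000, 800))  # 6.25
```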

Why does latency matter more for cloud gaming?

In traditional games, frames per second (FPS) is the core metric for measuring a gamer’s experience. Latency does have an impact, but more often than not it is a product of FPS: the higher the FPS, the lower the latency. For example, an excellent modern game engine will have approximately 2-3 frames of latency. For a game running at 60 fps, each frame takes 16.67 ms, so the user latency will be approximately 33-50 ms.
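The frame-based arithmetic above can be written as a tiny helper. This is a simplified model that assumes latency is exactly a whole number of frame times; `engine_latency_ms` is a hypothetical name used for illustration.

```python
def engine_latency_ms(fps, frames_of_latency):
    """Latency of a game engine that runs `frames_of_latency` frames behind input."""
    # Each frame lasts 1000 / fps milliseconds.
    return frames_of_latency * 1000.0 / fps


for frames in (2, 3):
    print(f"{frames} frames at 60 fps: {engine_latency_ms(60, frames):.1f} ms")
# 2 frames at 60 fps: 33.3 ms
# 3 frames at 60 fps: 50.0 ms
```

Under the same model, doubling the frame rate to 120 fps halves the latency to roughly 17-25 ms.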

Cloud gaming differs because the FPS delivered to the user has a relatively consistent value of 30, 60 or 120. Assuming the user has a network connection with the required bandwidth and minimal packet loss, the user will get a consistent and smooth experience. However, latency can be much higher than when playing the same game locally on the user’s device, because other components add to the end-to-end latency:

- Network latency: The time it takes for a data packet to travel from client to server and back

- Encode/decode latency: The time it takes to compress and decompress the video stream.

- Buffer latency: Networking buffers used to store and reassemble packets into frames add further latency.

- Misc latency: As well as the significant parts mentioned above, there will be additional latencies due to intermediate code and additional memory copies needed.
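To make the components above concrete, the sketch below models an end-to-end latency budget as the game’s own latency plus the streaming overheads. The field names and the example values are made up for illustration and are not measurements of any service.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Illustrative end-to-end latency breakdown in milliseconds."""
    game_ms: float         # latency the game would have when run locally
    network_rtt_ms: float  # client -> server -> client round trip
    encode_ms: float       # server-side video compression
    decode_ms: float       # client-side video decompression
    buffer_ms: float       # packet reassembly buffers
    misc_ms: float         # extra memory copies and intermediate code

    def cloud_overhead_ms(self) -> float:
        """Latency added on top of local play by streaming."""
        return (self.network_rtt_ms + self.encode_ms + self.decode_ms
                + self.buffer_ms + self.misc_ms)

    def total_ms(self) -> float:
        return self.game_ms + self.cloud_overhead_ms()


# Hypothetical values chosen only to show the arithmetic.
budget = LatencyBudget(game_ms=40.0, network_rtt_ms=20.0, encode_ms=5.0,
                       decode_ms=5.0, buffer_ms=8.0, misc_ms=4.0)
print(budget.total_ms(), budget.cloud_overhead_ms())  # 82.0 42.0
```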

As a result, cloud gaming will always have a noticeable latency penalty compared with the same game rendered locally on capable hardware. However, depending on the user’s device, cloud gaming can deliver lower latencies than native play, as it can provide 120 fps gaming to devices that do not have the raw performance to render at those speeds locally; more on that in our next part.

Translating metrics to experience ratings

We have covered the different metrics that impact end gamer experience. This section will examine how we translate the measured metrics to a performance rating.

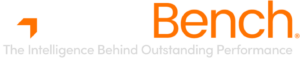

Input Latency Rating

The figure below shows how to convert latency readings to a colour-coded rating system. E.g., a user observing a median latency of over 150 ms with a standard deviation of more than 16.6 ms will perceive the experience to be poor quality.

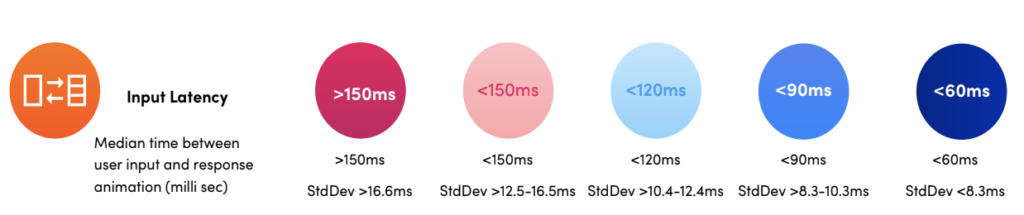
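The “poor” band in the example above can be expressed as a simple check on a set of latency samples. This sketch encodes only that one rule (median over 150 ms and standard deviation over 16.6 ms); it assumes the sample standard deviation is meant, and the other colour bands are not reproduced because their thresholds are not given in the text.

```python
import statistics


def is_poor_latency(samples_ms, median_limit_ms=150.0, stdev_limit_ms=16.6):
    """Return True if latency samples fall in the 'poor' band described above.

    Assumption: sample standard deviation; other colour bands are not encoded.
    """
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    median = statistics.median(samples_ms)
    stdev = statistics.stdev(samples_ms)
    return median > median_limit_ms and stdev > stdev_limit_ms
```

Requiring both conditions means a consistently high latency with little spread is not flagged by this particular rule.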

FPS ratings

The figure below shows how we can convert FPS readings (along with the minimum frame rate and the variability of the frame rate) to a colour-coded rating system. E.g., a user observing a median frame rate of less than 20 fps will perceive the experience as poor.
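A minimal sketch of the inputs to this rating, assuming per-second FPS samples are available: it reports the median, the minimum and a variability figure, and flags the “poor” case from the text (median below 20). Using the coefficient of variation for variability is an assumption, and `fps_summary` is a hypothetical name.

```python
import statistics


def fps_summary(per_second_fps):
    """Summarise per-second frame-rate samples and flag the 'poor' band."""
    if not per_second_fps:
        raise ValueError("no samples")
    median = statistics.median(per_second_fps)
    mean = statistics.mean(per_second_fps)
    return {
        "median_fps": median,
        "min_fps": min(per_second_fps),
        # Coefficient of variation: one assumed way to express variability.
        "fps_cv": statistics.pstdev(per_second_fps) / mean if mean else 0.0,
        # From the text: a median frame rate below 20 is rated poor.
        "poor": median < 20,
    }
```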

Battery ratings

The figure below shows the conversion from gameplay hours to a battery rating. From a power consumption standpoint, a device that can only stream a cloud game for less than 3 hours from a full charge is considered poor.

Visual Quality ratings

We currently don’t have a rating system for visual quality. Still, a good indicator is whether a gaming session could maintain the highest resolution supported for that device. E.g., if you are using GeForce Now over a mobile network, can it stream at 1080p and 120 fps for the entire gaming session?
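One way to answer that question is to sample the stream’s resolution and frame rate at regular intervals and compute the share of samples that met the target. The `(height, fps)` sample format and the `share_at_target` helper are hypothetical; how the samples are collected depends on your tooling.

```python
def share_at_target(samples, target_height=1080, target_fps=120):
    """Fraction of samples where the stream met or exceeded the target.

    samples: list of (height_px, fps) tuples taken at regular intervals
    (a hypothetical logging format).
    """
    if not samples:
        raise ValueError("no samples")
    hits = sum(1 for height, fps in samples
               if height >= target_height and fps >= target_fps)
    return hits / len(samples)
```

A result of 1.0 means the session held 1080p at 120 fps throughout; anything lower shows how much of the session was spent at a reduced resolution or frame rate.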

How can the latency be measured?

There are several ways to measure the input-to-action latency of a cloud gaming service. The following outlines three popular ones:

1. Using a High-Speed Camera

One of the simplest ways to measure input-to-action latency is to use a high-speed camera that can capture at least 120 frames per second (fps). Ideally, you need the camera to record at least twice the frame rate of the cloud gaming stream’s FPS. For instance, if you use GeForce Now with a 120 fps setting, you need at least a 240 FPS camera, ideally even higher.

You can use any high-speed camera device, such as a smartphone or a DSLR. The idea is to record both your input device (such as a controller or a keyboard) and your display device (such as a monitor or a TV) simultaneously and then count the number of frames between your input and the game’s reaction. To make the process less cumbersome, you can “instrument” your input controller to light up an LED when the button is pressed.

For example, if you press the fire button on your controller and see the bullet on your screen after six frames, and your camera is recording at 120 fps, your input-to-action latency is 6/120 = 0.05 seconds, or 50 milliseconds. This method is easy to set up and can give you a rough latency estimate. However, it may not be very accurate or consistent because of factors such as camera quality, lighting conditions or human error, and it is tough to obtain many measurements from different locations without enormous effort.

This video shows this method in action.
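The frame-counting arithmetic from the worked example can be captured in a small helper. This sketch also returns the camera’s frame interval, since counting frames can only resolve latency to about one camera frame; that is one reason a camera at least twice as fast as the stream is recommended. Both function names are hypothetical.

```python
def min_camera_fps(stream_fps):
    """Rule of thumb from the text: record at least twice the stream frame rate."""
    return 2 * stream_fps


def camera_latency_ms(frame_count, camera_fps):
    """Convert a counted number of camera frames into latency.

    Returns (latency_ms, resolution_ms). The input and the on-screen reaction
    can each occur anywhere within a camera frame, so a single measurement is
    only accurate to roughly one frame interval.
    """
    latency_ms = frame_count * 1000.0 / camera_fps
    resolution_ms = 1000.0 / camera_fps
    return latency_ms, resolution_ms


print(camera_latency_ms(6, 120))  # (50.0, 8.333333333333334)
print(min_camera_fps(120))        # 240
```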

2. Using an NVIDIA Reflex Monitor

Another way to measure input-to-action latency is to use an NVIDIA Reflex monitor with a built-in latency analyser. An NVIDIA Reflex monitor has a dedicated port for your input device and can detect a graphical change at a specific point on the screen. It calculates input-to-action latency by measuring the time between an input event and the resulting change on the screen.

This method is more accurate and reliable than using a high-speed camera. Still, it requires a compatible monitor and input device and only allows the user to take a single sample at a time. The user has to note down the reading manually, and there is no automated way to send all the measured data to storage.

3. Using GameBench Tools

A third way to measure input-to-action latency is to use GameBench tools designed for performance testing and analysis of games across different platforms and networks. GameBench offers various tools and services for game developers, publishers, network operators, device manufacturers, and gamers who want to measure and improve their gaming experience.

One of the tools that GameBench offers is ProNet, an automated cross-platform tool for network testing and analysis. ProNet can measure real-world, end-to-end user latency for both native and cloud gaming applications. It can also provide comparative network performance data and quantitative user experience measurement.

Using ProNet, you can measure latency without any specialised hardware and determine whether network metrics such as jitter and packet loss affect end-gamer performance metrics.

To use this method to measure input-to-action latency, install ProNet on your Windows or Android device and launch the game you want to test. You can then use ProNet’s dashboard or web portal to view the latency metrics for your gaming session.

Get in touch with us if you’d like to try out ProNet.

In the next part of this series, we will break down the latency into individual components and also talk about some performance optimisations that cloud gaming providers rely on. Stay tuned!

Performance IQ by GameBench is a hassle-free, one-minute read that keeps you abreast of precisely what you need to know. Every two weeks, right to your inbox. Here’s a quick and easy sign up for your personal invite to the sharp end of great gamer experience.

And of course, get in touch anytime for an informal chat about your performance needs.

The intelligence behind outstanding performance